Privacy through Obscurity

Privacy in the Era of Ubiquitous Cameras and AI

Lately I’ve been thinking about the relationship between embedded vision and privacy. Surveillance cameras are nothing new, of course. For decades, they’ve been ubiquitous in and around restaurants, stores, banks, offices, airports, train stations, etc. In the course of a typical week, I’d guess that my image is captured by dozens of these cameras.

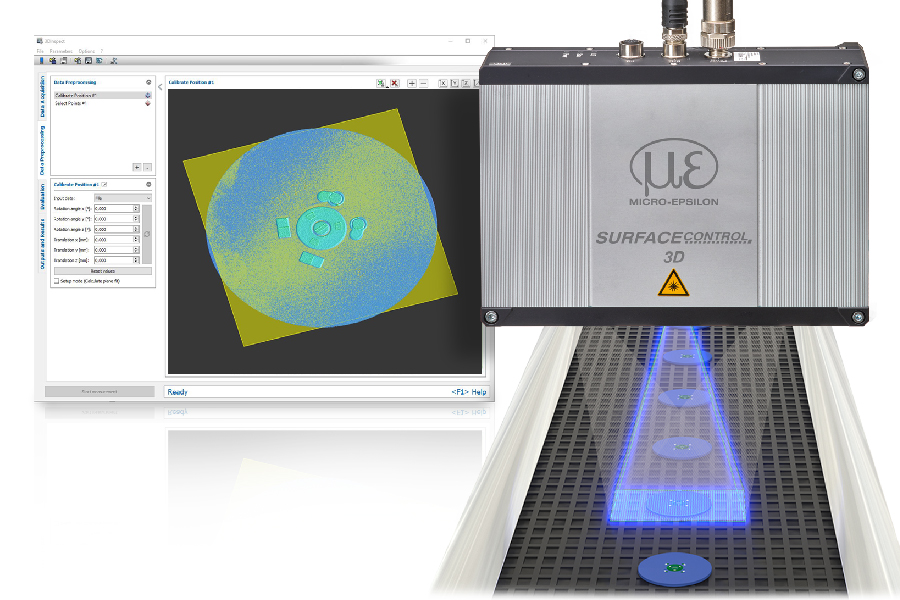

The Netatmo Welcome home security camera incorporates face recognition and allows the owner to configure the device so that video is not recorded when specific people are present. (Figure: Netatmo SAS)

As someone who values privacy, I find the presence of so many surveillance cameras unsettling. But I’ve been comforted by the idea of privacy through obscurity – the knowledge that although in theory a person, company, or government could learn quite a bit about me from video captured by these cameras, in reality no one has been paying attention. The main way that surveillance video is analyzed is by having people watch it, which is feasible only for a minuscule fraction of the video collected. As a result, most surveillance video gets erased without ever being viewed, unless a crime or accident has occurred nearby.

But this comfortable situation is changing fast, due to two factors. First, technology advances are making it increasingly practical to use algorithms, rather than people, to evaluate surveillance video. The last few years have seen huge advances in the accuracy of such algorithms, thanks to deep neural network techniques. And now the cost and energy required to run these algorithms are quickly shrinking by three to four orders of magnitude, thanks to more efficient algorithms, processors and software tools.

Second, the number of deployed cameras is growing fast: 120 million new connected video cameras were deployed in 2016. And in addition to the millions of stand-alone cameras, we are also seeing cameras incorporated into a rapidly expanding range of systems, such as cars, vacuum cleaning robots and augmented reality headsets. iRobot’s CEO recently said that the company is considering selling 3D map data collected by its Roomba vacuum cleaning robots inside customers’ homes. And in April, Amazon introduced the Echo Look, a hands-free camera and style assistant, which allows users to snap photos and videos of themselves at home to get recommendations on clothing choice (while providing Amazon with images of themselves, their wardrobes, and their homes).

According to estimates by Chris Rowen of Cognite Ventures, presented at an Embedded Vision Alliance event in February, there are already more image sensors in use than there are people on the planet – and the number of image sensors is growing much faster than the number of people.

“It’s increasingly feasible to integrate sophisticated visual understanding algorithms into cameras themselves, rather than sending video to the cloud for analysis.” – Jeff Bier, Embedded Vision Alliance (Figure: Embedded Vision Alliance)

So the world of connected video cameras is changing in big ways: There are many more cameras in many more places. Increasingly, algorithms are able to extract useful information from these cameras. And, of course, cameras connected to the cloud mean that algorithms can analyze video not only from individual cameras, but from groups of cameras – providing the ability to track people or vehicles, for example, as they move from place to place.

All of this is beginning to sound more than a bit scary from a privacy perspective. But there’s some good news as well. As I discussed in a recent column, it’s increasingly feasible to integrate sophisticated visual understanding algorithms into cameras themselves, rather than sending video to the cloud for analysis. Not only is video analysis at the edge possible, it’s increasingly necessary for economic and technical reasons.

This opens up a wonderful opportunity to enhance privacy. When devices themselves incorporate vision algorithms, device designers can limit the amount of video and other data that these devices transmit – and, crucially, they can offer users the ability to set their own limits. An excellent example of this is the Netatmo Welcome home security camera. This clever device incorporates face recognition and allows the owner to configure the device so that video is not recorded when specific people (typically family members) are present.

So, somewhat counter-intuitively, improved vision algorithms and processors can actually enhance privacy, even as cameras proliferate into private spaces. But improved privacy won’t happen automatically. If we as consumers want it, we’ll have to demand it. Companies and product developers will then find that they are increasingly able to deliver it.

If you’re developing vision algorithms, systems or applications, you’ll want to attend the Embedded Vision Summit, May 22-24, 2018 in Santa Clara, California – the preeminent conference for people who are creating products with visual perception. For details, visit the event web page.